How many jelly beans are in a jar? Or candy pieces? Or odd-shaped Lego pieces of differing sizes? What if the container is oddly shaped? Or we have multiple jars?

I always enjoy a good estimation challenge and find it difficult to pass up a chance to “make a guess and win it all!” So what are some good strategies?

You can always spend a lot of time counting as many pieces as you can see, then apply some math. This works well if you are dealing with a well-defined shape, like jelly beans, and know something about the container (you can stuff about 900-950 “regular” sized jelly beans in a gallon jar). But what happens if you have an oddly shaped container, or some other objects mixed in? What happens if you are dealing with an assortment of different candies and chocolates? (Which, in my opinion, are better winnings.)
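
For the easy case, the “apply some math” part can be as simple as scaling the per-gallon figure to the size of the container. Here is a minimal sketch. It assumes the beans pack about as densely in your container as in a gallon jar, which may not hold for odd shapes, and the names `estimate_range` and `container_gallons` are made up for this illustration.

```python
# Range quoted above: about 900-950 "regular" jelly beans per gallon jar.
BEANS_PER_GALLON = (900, 950)

def estimate_range(container_gallons):
    """Scale the per-gallon range to a container of the given volume.

    Assumption: packing density is the same as in the gallon jar.
    """
    low, high = BEANS_PER_GALLON
    return round(low * container_gallons), round(high * container_gallons)

# A half-gallon jar under the same-density assumption.
print(estimate_range(0.5))
```

The output is a range, not a single number, which is a useful reminder that even the “well-defined” case carries some uncertainty.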

The following are some strategies for increasing your odds. I believe there are some applications to business and management, as estimating is something we do more often than we think.

1. Do NOT look at others’ guesses before making a guess. Doing so may inadvertently set an “anchor” for your guess. In any kind of estimation, you want to avoid “bad” anchors. Chances are, you are not dealing with pros anyway.

2. Plan on spending a little bit of time. Not “take out a pencil and calculate the definite integral of 3D space” time, but more than 5 seconds. Often, we cheat ourselves out of using our information and instinct (both important factors in estimating) and give up too fast. What I have seen people do is this: they try some method, like counting the whole pieces they can see. Then they realize that the method does NOT lead to a good estimate. “If I can count ~50 whole pieces and ~40 half pieces, how am I going to use that info?” Then they give up.

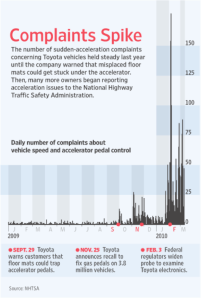

An important lesson is this: sometimes we think we need very specific data (how many whole pieces can I count?), but when we actually have that data, we don’t know how to use it or what it really means. Data is always important, but don’t be fooled into thinking that data = answer.

3a. Having said that, do some counting. Mentally divide the jar into sections, like halves or thirds. Take special care if the jar is tapered, since the sections will not hold equal amounts. Just get to some manageable subpart, then see what you can count. Do a gut-feel estimate based on how you think things are arranged inside. Pick up the jar, feel the weight.

3b. Then start over. This is the mistake that many people make in estimating: they only do it once, using only one method. We bias ourselves into thinking whatever method we start with is the superior method. This is what clouds our judgment for stuff like this. So, take another “fresh” estimate, maybe working from what you can count from the bottom. Maybe give yourself a break between the two estimates. Whatever you do, you must NOT bias your second estimate with your first. Do NOT look at others’ guesses while you come up with this second estimate.

4. Then come up with something between these two numbers. Remember that number. Then walk away. That’s right. Unless the time is running out, just walk away. Come back later.

5. When the guessing is about to close, come back and THEN take a look at the others’ guesses. Here’s the trick: tweak your guess so that it sits near the midpoint between the two guesses on either side of yours. For example, if you are thinking 1200, and someone has 1150 and another person has 1210, move your guess toward 1150, to something like 1180 (the midpoint of 1150 and 1210).

6. When they reveal the answer, make a note of whether you guessed too high or too low. Then think about what influenced your bias. Were you surprised at how heavy the jar was, and then guessed too high a number? Did you actually think about how awful it would be to eat the whole jar, and then guessed too low a number?

7. Look forward to the next guessing opportunity to get better at this.
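
The arithmetic in steps 3a, 4 and 5 is small enough to sketch in a few lines. This is only an illustration: the function names are made up, taking the mean in step 4 is my assumption (the step only asks for “something between” the two numbers), and the section scaling assumes equal-sized sections, so it will be off for a tapered jar.

```python
from bisect import bisect_left

def scale_section_count(count_in_section, sections):
    """Step 3a: scale a count from one section up to the whole jar.

    Assumption: every section holds about the same amount (not true
    for a tapered jar).
    """
    return count_in_section * sections

def combine(first, second):
    """Step 4: pick a number between two independent estimates.

    Assumption: the plain mean is used; any value in between would
    satisfy the step as written.
    """
    return round((first + second) / 2)

def tweak_to_midpoint(my_guess, other_guesses):
    """Step 5: move to the midpoint of the two guesses adjacent to mine.

    If there is no guess below or no guess above mine, the guess is
    returned unchanged.
    """
    others = sorted(set(other_guesses))
    i = bisect_left(others, my_guess)
    if i == 0 or i == len(others):
        return my_guess
    lower, upper = others[i - 1], others[i]
    return (lower + upper) // 2

first = scale_section_count(400, 3)   # counted about 400 in one third
second = 1200                         # a fresh, independent estimate
my_guess = combine(first, second)
print(tweak_to_midpoint(my_guess, [1150, 1210, 800, 2000]))
```

With the numbers from step 5 (your 1200 between 1150 and 1210), the tweak lands on 1180.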

It’s not a surprise to those who have read other posts on this site, but the two common themes are:

1. Try to unbias yourself as much as possible, or in reality, since you can’t completely unbias yourself, at least understand what biases are at play.

2. Refine, learn and get better.

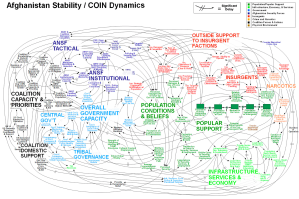

We are visual people. We recall past events by the mental picture we see (or create). Words are powerful and can be precise, of course, but visual images can often leave very strong and lasting impressions in our minds.